Bachelor Thesis (Aerospace Engineering)

Deep Learning for Automated Surface Quality Assessment in Robotic Grinding

Developing a robust dataset and evaluating machine learning viability for in-process quality control of metal components.

I. Introduction: Social Value & Objective

Automating the final grinding and finishing step in manufacturing processes is critical due to the rising challenge of finding skilled workers and the overall industry push toward AI and robotics. Current quality control relies on slow, contact-based tactile devices or expensive laser systems, limiting the feasibility of dynamic, in-process measurements.

The project objective was to create a preliminary dataset of labeled grinding images and evaluate different Machine Learning (ML) and Deep Learning (DL) approaches to establish a viable future direction for the ROBOTe lab's fully automatic robotic-arm manufacturing solution.

Workpiece Condition: Before & After Grinding

The workpiece before grinding (left, Rz ≈ 12.7 μm) and after 124 iterations (right, Rz ≈ 1.1 μm).

II. Methods: Data Acquisition & Processing

Experimental Setup & Acquisition

Grinding was performed on a C45 steel workpiece using the KUKA LBR iiwa 7 R800 robotic arm fitted with a prototype end-of-arm tool (EOAT). To make the final model robust to real-world factory conditions, images were acquired under varied conditions:

- Angles: Four sets of 3D-printed brackets were used to capture images at 30°, 45°, 60°, and 90° angles.

- Lighting: Seven unique lux configurations were applied to the 90° images to test model resilience against variable lighting conditions.

- Hardware: Three different cameras (Canon EOS 50, Allied Vision, Logitech C920) were used to create three separate datasets for comparison.

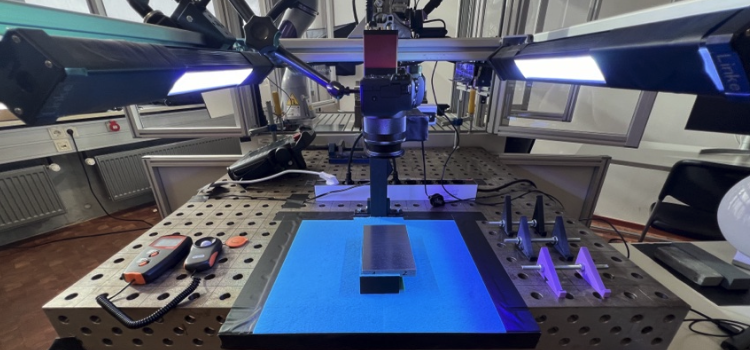

Experimental Setup for Image Acquisition

The experimental rig showing the KUKA arm's EOAT, light bars, and workpiece setup.

Data Processing Pipeline

To ensure high data quality, raw images were processed with a custom Python pipeline. The pipeline cropped away the background automatically using an edge detection algorithm, then enlarged the dataset by extracting up to 24 cropped regions from each raw image.
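The region-cropping step can be illustrated with a minimal sketch. It assumes the background has already been removed and that the 24 regions form a regular 4 × 6 grid of non-overlapping tiles. The grid shape, the function name `grid_crop_boxes`, and the example image size are illustrative assumptions, not details taken from the thesis pipeline.

```python
from typing import List, Tuple

# (left, upper, right, lower) in pixels; the same ordering that common
# imaging libraries such as Pillow expect for a crop box.
Box = Tuple[int, int, int, int]


def grid_crop_boxes(width: int, height: int, rows: int = 4, cols: int = 6) -> List[Box]:
    """Split a width x height region into rows * cols equal, non-overlapping boxes.

    The 4 x 6 default yields 24 boxes; this grid layout is an assumption.
    Pixels left over by integer division at the right/bottom edge are dropped.
    """
    if rows <= 0 or cols <= 0:
        raise ValueError("rows and cols must be positive")
    tile_w = width // cols
    tile_h = height // rows
    if tile_w == 0 or tile_h == 0:
        raise ValueError("image is too small for the requested grid")

    boxes: List[Box] = []
    for r in range(rows):
        for c in range(cols):
            left = c * tile_w
            upper = r * tile_h
            boxes.append((left, upper, left + tile_w, upper + tile_h))
    return boxes


# Hypothetical 1200 x 800 px workpiece region after background cropping.
boxes = grid_crop_boxes(width=1200, height=800)
print(len(boxes), boxes[0], boxes[-1])
```

Each returned box could then be passed to an image library's crop routine, with every tile inheriting the roughness label of its parent image.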

For labeling, each image was assigned the highest roughness value (Rz) measured by the tactile device. This makes the resulting model conservative: it prefers unnecessary additional grinding over passing a faulty part.
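As a rough illustration of this labeling rule, the sketch below takes several tactile Rz readings for one surface, keeps the worst (highest) one, and maps it to a roughness class. Only the first class (1–3 μm) and the last class (7+ μm) are named in this summary; the intermediate class edges at 5 μm and 7 μm, and the names `CLASS_EDGES_UM` and `conservative_label`, are assumptions made for the example.

```python
from typing import Sequence

# Upper edges (in micrometres) of all classes except the open-ended last one.
# ASSUMPTION: the 3-5 and 5-7 bins are illustrative, not taken from the thesis.
CLASS_EDGES_UM = (3.0, 5.0, 7.0)
CLASS_NAMES = ("1-3 um", "3-5 um", "5-7 um", "7+ um")


def conservative_label(rz_measurements_um: Sequence[float]) -> str:
    """Return the class of the highest Rz reading, i.e. the roughest measurement."""
    if not rz_measurements_um:
        raise ValueError("at least one Rz measurement is required")
    worst = max(rz_measurements_um)
    for edge, name in zip(CLASS_EDGES_UM, CLASS_NAMES):
        if worst < edge:
            return name
    return CLASS_NAMES[-1]


# Three hypothetical readings on the same surface: the 3.2 um reading decides.
label = conservative_label([2.4, 2.9, 3.2])
print(label)
```

Taking the maximum rather than the mean means a single rough spot is enough to push the surface into a rougher class, which is what biases the model toward extra grinding.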

III. Results & Evaluation

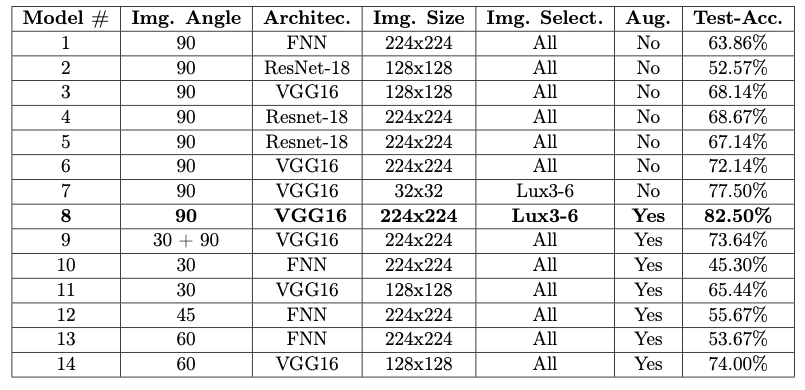

Classification Model Performance

Experiments compared a feedforward neural network (FNN) baseline with two convolutional neural networks (CNNs), ResNet-18 and VGG16, and focused on classification accuracy. The best configuration combined the VGG16 architecture with data augmentation and excluded images taken under poor lux configurations.

Metrics Comparison: Baseline vs. VGG16 (Model 8)

Compared with the FNN baseline, Model 8 improved the metrics for every roughness class (1–3 μm through 7+ μm).

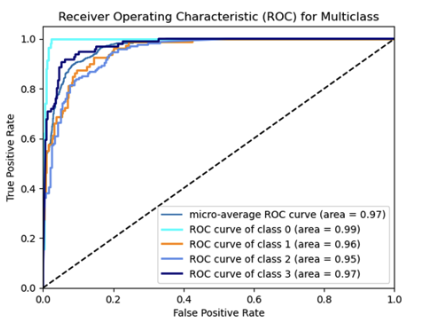

ROC Curves & AUC Evaluation

ROC curves showing Model 8's superior performance (Average AUC ≈ 0.97) compared to the baseline (Average AUC ≈ 0.85).
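For readers unfamiliar with multi-class AUC, the sketch below shows one common way an "average AUC" can be computed: a one-vs-rest AUC per class, averaged with equal weight (macro average). Whether the thesis used this exact averaging is an assumption, and the scores are made-up toy values, not thesis results.

```python
from typing import List, Sequence


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(true_classes: Sequence[int],
                  class_scores: Sequence[Sequence[float]]) -> float:
    """Unweighted mean of the one-vs-rest AUC of every class."""
    n_classes = len(class_scores[0])
    aucs: List[float] = []
    for k in range(n_classes):
        labels = [1 if y == k else 0 for y in true_classes]
        scores = [row[k] for row in class_scores]
        aucs.append(binary_auc(labels, scores))
    return sum(aucs) / len(aucs)


# Toy example: 6 samples, 3 classes, one row of predicted scores per sample.
y_true = [0, 0, 1, 1, 2, 2]
y_score = [
    [0.7, 0.2, 0.1],
    [0.4, 0.5, 0.1],
    [0.2, 0.6, 0.2],
    [0.3, 0.4, 0.3],
    [0.1, 0.2, 0.7],
    [0.2, 0.3, 0.5],
]
avg_auc = macro_ovr_auc(y_true, y_score)
print(round(avg_auc, 4))
```

The pairwise comparison is quadratic in the number of samples, which is fine for a small test set; a library routine would be preferable for larger ones.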

Key Evaluation Findings

- The best model (VGG16 Model 8) achieved a test accuracy of 82.50%, demonstrating the method's feasibility.

- A VGG16 regression model achieved a low Mean Absolute Error (MAE) of 0.3328 μm in the 1–2 μm roughness range.

- The test accuracy for the 60° angled images was surprisingly high at 74.00%, suggesting viability for applications where 90° camera access is physically impossible.
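The range-restricted MAE reported above can be read as the mean of |predicted Rz − measured Rz| over the test samples whose measured Rz lies in the range of interest. The sketch below shows that calculation under the assumption that samples are selected by their measured (true) value with inclusive bounds; the function name `mae_in_range` and the numbers are illustrative, not thesis data.

```python
from typing import Sequence


def mae_in_range(y_true: Sequence[float], y_pred: Sequence[float],
                 low: float, high: float) -> float:
    """Mean absolute error over samples whose true value lies in [low, high]."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    errors = [abs(t - p) for t, p in zip(y_true, y_pred) if low <= t <= high]
    if not errors:
        raise ValueError("no samples fall inside the requested range")
    return sum(errors) / len(errors)


# Hypothetical measured and predicted Rz values in micrometres.
rz_true = [1.2, 1.8, 2.5, 1.5]
rz_pred = [1.5, 1.6, 2.0, 1.9]

# The 2.5 um sample is outside 1-2 um and is ignored.
mae = mae_in_range(rz_true, rz_pred, low=1.0, high=2.0)
print(round(mae, 4))
```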

IV. My Contribution & Role

As the sole researcher for this Bachelor Thesis, my role involved the design, implementation, execution, and analysis of the entire project:

- Experimental Design: Conceptualizing and designing the acquisition setup, including custom 3D-printed brackets for multi-angle image capture.

- Data Acquisition: Leading the 125 grinding iterations, acquiring the multi-angle and multi-lux image dataset, and conducting the physical tactile roughness measurements.

- Pipeline Development: Writing all Python scripts for data processing, including automated cropping, FNC generation, and label file creation.

- ML Implementation: Implementing, training, and analyzing all VGG16 and ResNet-18 classification and regression models.